Identifying anomalies in real-time is critical for detecting potential security breaches, application failures, or unexpected behaviors in systems. Traditional anomaly detection approaches often fall short due to the dynamic and evolving nature of data streams. This is where Amazon SageMaker’s Random Cut Forest (RCF) model comes in. RCF is designed for anomaly detection in high-dimensional data streams, making it ideal for detecting outliers in log data. This article will guide you through the process of training an RCF model in SageMaker, deploying real-time endpoints, and integrating Lambda for real-time anomaly detection in streaming logs.

Training the RCF Model

The first step in detecting anomalies is to build and train a Random Cut Forest model in Amazon SageMaker. RCF is an unsupervised learning algorithm specifically designed for anomaly detection in streaming data.
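The intuition behind random cuts fits in a few lines of plain Python. This is a simplified illustration, not SageMaker's implementation: each tree here is built over all points instead of a sample of num_samples_per_tree, and it reports isolation depth instead of SageMaker's score. The SageMaker documentation describes the RCF score as roughly inversely proportional to a point's depth in a tree, so points that are isolated after only a few cuts are the likely anomalies. The function names and toy data are hypothetical.

```python
import random
from typing import List, Sequence

Point = Sequence[float]


def cut_depth(points: List[Point], target: int, rng: random.Random) -> int:
    """Number of random cuts needed to isolate points[target] from the rest."""
    current = list(range(len(points)))
    dims = len(points[target])
    depth = 0
    while len(current) > 1:
        lows = [min(points[i][d] for i in current) for d in range(dims)]
        highs = [max(points[i][d] for i in current) for d in range(dims)]
        spans = [high - low for high, low in zip(highs, lows)]
        if sum(spans) == 0:  # only exact duplicates of the target remain
            break
        # Random cut: pick a dimension with probability proportional to its span,
        # then a cut value uniformly inside that dimension's range
        dim = rng.choices(range(dims), weights=spans)[0]
        cut = rng.uniform(lows[dim], highs[dim])
        goes_left = points[target][dim] <= cut
        # Keep only the points on the same side of the cut as the target
        current = [i for i in current if (points[i][dim] <= cut) == goes_left]
        depth += 1
    return depth


def average_depth(points: List[Point], target: int, num_trees: int = 50, seed: int = 0) -> float:
    """Average isolation depth of points[target] over independent random cut trees."""
    rng = random.Random(seed)
    return sum(cut_depth(points, target, rng) for _ in range(num_trees)) / num_trees


# Usage: a tight cluster in the unit square plus one distant point
data_rng = random.Random(42)
data = [(data_rng.random(), data_rng.random()) for _ in range(30)] + [(10.0, 10.0)]
outlier_depth = average_depth(data, target=30)
inlier_depth = average_depth(data, target=0)
print(f"outlier: {outlier_depth:.2f}, inlier: {inlier_depth:.2f}")
```

The distant point is usually separated by the very first cut, because the cut dimension is chosen in proportion to the bounding-box span and most of that span lies between the cluster and the outlier.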

1. Dataset Preparation

Before training, preprocess your logs into numeric form. Each record in the dataset must be a vector of numbers describing one log entry (for example, response time, status code, or log level). Categorical fields such as log levels have to be mapped to numbers first.

Sample log data:

```
0.45, 200, 1200
0.50, 404, 1350
0.55, 500, 900
```

The columns represent response time, status code, and request duration.
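As a minimal sketch of that preprocessing, assume each log line has already been parsed into a dictionary with hypothetical response_time, status, duration_ms, and level fields. The field names and the log-level mapping are illustrative assumptions. Note that encoding the log level adds a fourth column to the three in the sample above, so the feature dimension you train with must match.

```python
import csv
import io
from typing import Dict, List, Union

Number = Union[int, float]

# Hypothetical ordinal encoding for log levels; adjust to the levels your logs use
LOG_LEVELS = {"DEBUG": 0, "INFO": 1, "WARN": 2, "ERROR": 3, "FATAL": 4}


def to_features(record: Dict[str, str]) -> List[Number]:
    """Convert one parsed log record (hypothetical field names) into numbers."""
    return [
        float(record["response_time"]),       # response time as logged
        int(record["status"]),                # HTTP status code used as a number
        int(record["duration_ms"]),           # request duration
        LOG_LEVELS[record["level"].upper()],  # categorical level -> ordinal code
    ]


def to_csv(records: List[Dict[str, str]]) -> str:
    """Serialize records as headerless CSV, one feature vector per line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for record in records:
        writer.writerow(to_features(record))
    return buffer.getvalue()


rows = [
    {"response_time": "0.45", "status": "200", "duration_ms": "1200", "level": "info"},
    {"response_time": "0.55", "status": "500", "duration_ms": "900", "level": "ERROR"},
]
print(to_csv(rows))
```

The output has no header row, which matches the sample data above.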

2. Training the RCF Model

In SageMaker, start by specifying the RandomCutForest algorithm in your training job. You can create the training job using SageMaker’s Python SDK.

import sagemaker

from sagemaker import RandomCutForest

from sagemaker.inputs import TrainingInput

# Create SageMaker session

session = sagemaker.Session()

# Define the RCF estimator

rcf = RandomCutForest(

role="arn:aws:iam::<role-name>",

instance_count=1,

instance_type="ml.m5.large",

num_samples_per_tree=512,

num_trees=50,

sagemaker_session=session,

)

# Define the location of training data (in S3)

training_data = TrainingInput("s3://<bucket-name>/log-data.csv", content_type="text/csv")

# Train the model

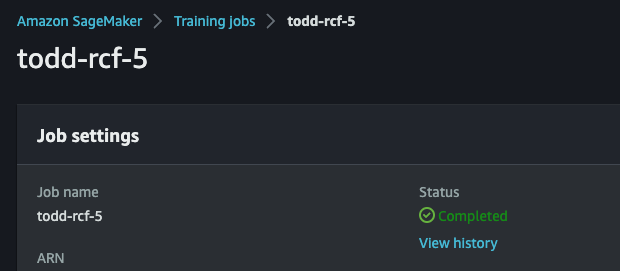

rcf.fit(training_data)

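- The num_trees parameter sets how many trees make up the forest. The forest's anomaly score is the average of the per-tree scores, so more trees give more stable scores at the cost of more computation. num_samples_per_tree sets how many data points are randomly sampled from the training set to build each tree, which affects how rare a point must be to receive a high score.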
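A rule of thumb from AWS's RCF examples is to choose num_samples_per_tree so that 1/num_samples_per_tree approximates the fraction of anomalies you expect. By that rule, the value of 512 used above implies an anomaly rate of about 0.2%. The helper below is a hypothetical sketch of that heuristic. It clamps to 1 to 2048, the range documented for this hyperparameter at the time of writing (check the current hyperparameter reference).

```python
def suggest_num_samples_per_tree(expected_anomaly_ratio: float) -> int:
    """Heuristic: pick num_samples_per_tree so that 1/value ~ expected anomaly ratio."""
    if not 0 < expected_anomaly_ratio < 1:
        raise ValueError("expected_anomaly_ratio must be between 0 and 1")
    suggested = round(1 / expected_anomaly_ratio)
    # Clamp to the documented hyperparameter range (assumed to be 1..2048)
    return max(1, min(2048, suggested))


print(suggest_num_samples_per_tree(0.002))  # roughly 1 anomaly per 500 log entries
```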

Once training is complete, SageMaker saves the trained model artifact (model.tar.gz) to S3 under the estimator's output path, which defaults to the session's default bucket.

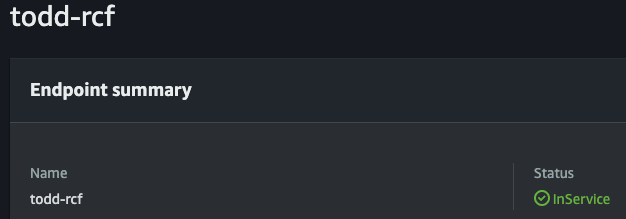

Deploying SageMaker Endpoints

After training the RCF model, the next step is to deploy the model as a real-time inference endpoint. The endpoint will receive log data and return an anomaly score, which indicates how likely the data is to be an anomaly.

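1. Create and Deploy the Endpoint

Create a model from the training job's output and deploy it. The deploy() call creates the SageMaker model, an endpoint configuration for the chosen instance type and count, and the endpoint itself: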

```python
# Create a SageMaker model from the trained estimator's artifacts
model = rcf.create_model()

# Deploy the model to a real-time endpoint
predictor = model.deploy(
    initial_instance_count=1,
    instance_type="ml.m5.large",
    endpoint_name="rcf-anomaly-endpoint",
)
```

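2. Invoke the Endpoint

After deploying the endpoint, you can start sending real-time log data to it for anomaly detection. Here the predictor is configured to send CSV and to parse the JSON response, which RCF returns as {"scores": [{"score": ...}]} with one entry per input record:

```python
from sagemaker.deserializers import JSONDeserializer
from sagemaker.serializers import CSVSerializer

# Send records as CSV and parse the JSON response
predictor.serializer = CSVSerializer()
predictor.deserializer = JSONDeserializer()

# One record: response time, status code, request duration
test_data = [[0.48, 500, 1250]]

response = predictor.predict(test_data)
anomaly_score = response["scores"][0]["score"]

print(f"Anomaly score: {anomaly_score}")
```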

- The higher the anomaly score, the more likely it is that the data point is an outlier.
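RCF scores have no fixed scale, so a cutoff has to be chosen from your own data. One common heuristic, used in AWS's RCF examples, is to score a baseline of normal traffic and flag anything above the mean plus three standard deviations. A minimal sketch, assuming you already have a list of baseline scores (the helper names are hypothetical):

```python
import statistics
from typing import List, Sequence


def score_threshold(baseline_scores: Sequence[float], k: float = 3.0) -> float:
    """Return mean + k * (population) standard deviation of baseline scores."""
    mean = statistics.mean(baseline_scores)
    std = statistics.pstdev(baseline_scores)
    return mean + k * std


def flag_anomalies(scores: Sequence[float], threshold: float) -> List[bool]:
    """Mark each score that exceeds the threshold."""
    return [score > threshold for score in scores]


cutoff = score_threshold([0.9, 1.0, 1.1, 1.0, 0.95])
print(cutoff, flag_anomalies([1.0, 4.2], cutoff))
```

Raising k trades missed anomalies for fewer false alarms; recompute the cutoff whenever the model is retrained, since the score distribution can shift.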

Invoking SageMaker from Lambda

To enable real-time anomaly detection in streaming logs, integrate SageMaker with AWS Lambda. The Lambda function will process logs, invoke the SageMaker endpoint, and then store or take action based on the anomaly score.

1. Setting Up the Lambda Function

An event source, such as a Kafka topic carrying incoming logs, triggers the Lambda function. Inside the function, you invoke the SageMaker endpoint to get anomaly scores.
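When Lambda is attached to a Kafka topic (Amazon MSK or self-managed Kafka), the event groups messages under a records key, keyed by topic-partition, and each message value is base64-encoded. The helper below is a sketch for turning such an event into feature rows. It assumes each message value is one CSV line of features, which is a hypothetical producer format.

```python
import base64
from typing import Any, Dict, List


def kafka_event_to_rows(event: Dict[str, Any]) -> List[List[float]]:
    """Decode a Kafka-triggered Lambda event into numeric feature rows."""
    rows = []
    # "records" maps "topic-partition" keys to lists of messages
    for messages in event.get("records", {}).values():
        for message in messages:
            # Assumption: each value is a base64-encoded CSV line such as "0.48,500,1250"
            line = base64.b64decode(message["value"]).decode("utf-8")
            rows.append([float(field) for field in line.split(",")])
    return rows
```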

Example Lambda function code:

```python
import json

import boto3

# Create the client once per container so warm invocations reuse it
runtime = boto3.client("sagemaker-runtime")

ENDPOINT_NAME = "rcf-anomaly-endpoint"
ANOMALY_THRESHOLD = 3.0  # Illustrative cutoff; calibrate it on your own scores


def lambda_handler(event, context):
    # Extract log data from the event (assumes one feature vector in
    # event['log_data'], e.g. [0.48, 500, 1250])
    log_data = event["log_data"]

    # Prepare the data in RCF's JSON request format
    payload = json.dumps({"instances": [{"features": log_data}]})

    # Invoke the SageMaker endpoint
    response = runtime.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="application/json",
        Accept="application/json",
        Body=payload,
    )

    # Parse the response, which looks like {"scores": [{"score": ...}]}
    result = json.loads(response["Body"].read().decode("utf-8"))
    anomaly_score = result["scores"][0]["score"]

    # Log or take action based on the anomaly score
    if anomaly_score > ANOMALY_THRESHOLD:
        print(f"Anomaly detected: {anomaly_score}")
    else:
        print(f"Normal log data: {anomaly_score}")

    return {
        "statusCode": 200,
        "body": json.dumps({"anomaly_score": anomaly_score}),
    }
```
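The handler sends one request per record. When an event carries many log lines, scoring them in a single request saves latency and cost, because the endpoint returns one score per input row in the same order. These hypothetical helpers build the batched JSON body (passed as Body with ContentType="application/json") and read the scores back. They are a sketch, not part of the SageMaker SDK.

```python
import json
from typing import List, Sequence


def build_rcf_payload(rows: Sequence[Sequence[float]]) -> str:
    """Encode several feature rows in RCF's JSON request format."""
    return json.dumps({"instances": [{"features": list(row)} for row in rows]})


def parse_rcf_scores(body: str) -> List[float]:
    """Extract one score per input row from an RCF JSON response body."""
    return [item["score"] for item in json.loads(body)["scores"]]


print(build_rcf_payload([[0.48, 500, 1250], [0.50, 404, 1350]]))
```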

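2. Triggering the Lambda Function

Lambda can consume events from sources such as Amazon Kinesis Data Streams, Amazon MSK, or a self-managed Kafka cluster (for example, one running on EKS). Each invocation sends its log data to the SageMaker endpoint and gets back an anomaly score, so anomalies are flagged as logs arrive. These sources deliver records in their own event formats, so the handler must extract the feature vectors from the event before calling the endpoint.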
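For Kinesis Data Streams, Lambda delivers a Records list in which each record's payload is base64-encoded under kinesis.data. A minimal sketch of extracting feature rows, assuming producers write each log entry as a JSON array of numbers (a hypothetical format):

```python
import base64
import json
from typing import Any, Dict, List


def kinesis_event_to_rows(event: Dict[str, Any]) -> List[List[float]]:
    """Decode a Kinesis-triggered Lambda event into numeric feature rows."""
    rows = []
    for record in event.get("Records", []):
        # Kinesis payloads arrive base64-encoded under record["kinesis"]["data"]
        payload = base64.b64decode(record["kinesis"]["data"])
        # Assumption: each payload is a JSON array such as [0.48, 500, 1250]
        rows.append([float(value) for value in json.loads(payload)])
    return rows
```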

Key Takeaways

- RCF for Streaming Data: The Random Cut Forest model is highly effective for anomaly detection in high-dimensional and streaming data, making it ideal for log analysis.

- Real-Time Integration: By deploying SageMaker endpoints and invoking them through AWS Lambda, you can achieve real-time anomaly detection with minimal operational overhead.

- Seamless AWS Integration: SageMaker and Lambda combine with streaming and storage services such as Amazon MSK (managed Kafka), Amazon Kinesis, or DynamoDB to build robust, scalable, and cost-effective real-time data processing pipelines.

Using SageMaker’s RCF model and Lambda, you can easily implement real-time anomaly detection in your log processing systems. The flexibility and scalability of this setup enable you to detect outliers in streaming logs quickly and take appropriate action to mitigate potential issues.