Legacy systems, particularly those built on COBOL, continue to power critical business operations in enterprises worldwide. However, these systems often lack the ability to process and enhance data using modern AI techniques. By integrating large language models (LLMs) into a Kubernetes-based data pipeline, organizations can enrich structured data in real time, unlocking new insights and improving decision-making.

This article explores how enterprises can augment COBOL-based data transformation workflows with AI-powered enrichment, using an approach implemented in an EKS Data Processing Pipeline. We will cover:

- The architecture of real-time AI enrichment for legacy data.

- Implementation using AWS services, Kubernetes, and LLMs.

- A step-by-step guide with Terraform, Kubernetes manifests, and Python scripts.

- The business impact of real-time AI-enhanced data processing.

The Problem: Static Data in Legacy Systems

Legacy workloads rely on structured formats such as CSV files, which contain raw transactional or operational data. These files, while useful, lack contextual insights, making real-time decision-making difficult. Businesses need a way to enrich these datasets dynamically, without modifying the underlying COBOL applications.

The Solution: AI-Powered Data Enrichment

By leveraging an AWS EKS cluster and a Kubernetes CronJob, COBOL-based batch processing can be combined with an LLM service for real-time enrichment. This solution enables:

- Automated transformation of structured data using COBOL.

- Real-time AI augmentation using LLMs for deeper insights.

- Scalability and reliability through cloud-native infrastructure.

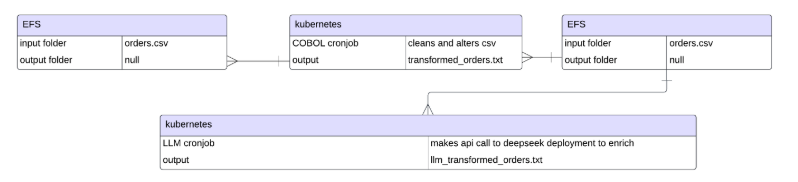

Architecture Overview

The diagram above illustrates the real-time AI enrichment pipeline, which consists of four components:

- COBOL Data Processing: A Kubernetes CronJob executes a COBOL program that transforms CSV data.

- Persistent Storage: The processed CSV files are stored in AWS EFS.

- AI Enrichment: A containerized LLM service reads the transformed CSV files, applies AI-driven enhancements, and writes enriched data back to storage.

- Deployment & Orchestration: ArgoCD automates deployments, and Terraform provisions the necessary AWS infrastructure.
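Because the COBOL CronJob and the enrichment stage hand files to each other through a shared EFS volume, a reader could pick up a file that is still being written. One common mitigation, sketched below as an assumption rather than something the pipeline is shown to do, is to write to a temporary file in the same directory and then swap it into place with `os.replace`, which is atomic on POSIX file systems. EFS is accessed over NFS, so treat that guarantee as something to verify for your setup. The helper name `atomic_write_text` is hypothetical.

```python
import os
import tempfile


def atomic_write_text(path: str, text: str) -> None:
    """Write text to path so a reader sees either the old file or the complete new one."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file must be on the same file system as the target for the rename to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "w") as tmp:
            tmp.write(text)
            tmp.flush()
            os.fsync(tmp.fileno())  # push the bytes to storage before the rename
        # mkstemp creates the file with mode 0600; widen it so a pod running as a
        # different user can read it (adjust to your own security requirements).
        os.chmod(tmp_path, 0o644)
        os.replace(tmp_path, path)  # swap the complete file into place in one step
    finally:
        # The temp file only still exists here if something above failed.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)


if __name__ == "__main__":
    target = os.path.join(tempfile.gettempdir(), "transformed_orders_demo.txt")
    atomic_write_text(target, "Order: 1234 | John Doe | Widget A\n")
    with open(target) as f:
        print(f.read(), end="")
```

In the Step 3 script, the final `open(output_file, 'w')` write could be replaced with `atomic_write_text(output_file, llm_response)`; the same idea applies to however the COBOL job writes its output.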

Implementation Guide

Step 1: Deploying Infrastructure with Terraform

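We use Terraform to provision the required AWS infrastructure, including EKS, EFS, and IAM roles. The excerpt below shows the EKS and EFS modules; the IAM roles and policies it references (such as the CSI driver IRSA roles) are defined elsewhere in the configuration.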

```hcl
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "20.31.6"

  cluster_name                    = local.environment
  authentication_mode             = "API_AND_CONFIG_MAP"
  cluster_version                 = var.eks_cluster_version
  cluster_endpoint_private_access = true
  cluster_endpoint_public_access  = true
  cluster_ip_family               = "ipv4"

  cluster_addons = {
    # The CSI drivers authenticate to AWS through IRSA roles defined elsewhere
    aws-ebs-csi-driver = {
      service_account_role_arn = module.ebs_csi_irsa_role.iam_role_arn
      most_recent              = true
    }
    aws-efs-csi-driver = {
      service_account_role_arn = module.efs_csi_irsa_role.iam_role_arn
      most_recent              = true
    }
    coredns = {
      most_recent = true
    }
    kube-proxy = {
      most_recent = true
    }
    vpc-cni = {
      most_recent    = true
      before_compute = true # create this add-on before the node groups
      configuration_values = jsonencode({
        env = {
          # Assign /28 IPv4 prefixes to ENIs to raise pod density per node
          ENABLE_PREFIX_DELEGATION = "true"
          WARM_PREFIX_TARGET       = "1"
        }
      })
    }
  }

  iam_role_additional_policies = {
    AmazonEC2ContainerRegistryReadOnly = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
  }

  enable_cluster_creator_admin_permissions = true
  cluster_tags                             = var.tags

  vpc_id     = module.vpc.vpc_id
  subnet_ids = module.vpc.private_subnets

  eks_managed_node_groups = {
    # GPU-backed nodes
    gpu_node_group = {
      ami_type       = "AL2_x86_64_GPU"
      instance_types = [var.eks_node_gpu_instance_type]

      min_size     = 1
      max_size     = 3
      desired_size = 1

      use_latest_ami_release_version = true
      ebs_optimized                  = true
      enable_monitoring              = true

      block_device_mappings = {
        xvda = {
          device_name = "/dev/xvda"
          ebs = {
            volume_size           = 75
            volume_type           = "gp3"
            encrypted             = true
            delete_on_termination = true
          }
        }
      }

      # Kubernetes label values are strings
      labels = {
        gpu                      = "true"
        "nvidia.com/gpu.present" = "true"
      }

      # Note: the EKS-optimized accelerated AMI (AL2_x86_64_GPU) already ships NVIDIA
      # drivers and the NVIDIA container toolkit, so these steps may be redundant.
      pre_bootstrap_user_data = <<-EOT
        #!/bin/bash
        set -ex

        # Install dependencies
        yum install -y cuda

        # Add the NVIDIA package repositories
        distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
        curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.repo | sudo tee /etc/yum.repos.d/nvidia-docker.repo

        # Install the NVIDIA container runtime
        sudo yum install -y nvidia-container-toolkit
      EOT
    }

    # CPU-only nodes
    node_group = {
      ami_type       = "AL2_x86_64"
      instance_types = [var.eks_node_instance_type]

      min_size     = 1
      max_size     = 5
      desired_size = 1

      use_latest_ami_release_version = true
      ebs_optimized                  = true
      enable_monitoring              = true

      block_device_mappings = {
        xvda = {
          device_name = "/dev/xvda"
          ebs = {
            volume_size           = 75
            volume_type           = "gp3"
            encrypted             = true
            delete_on_termination = true
          }
        }
      }

      labels = {
        gpu = "false"
      }

      iam_role_additional_policies = {
        AmazonSSMManagedInstanceCore = data.aws_iam_policy.AmazonSSMManagedInstanceCore.arn
        eks_efs                      = aws_iam_policy.eks_efs.arn
      }

      tags = var.tags
    }
  }
}

module "efs" {
  source  = "terraform-aws-modules/efs/aws"
  version = "1.6.5"

  name           = var.environment
  creation_token = var.environment
  encrypted      = true

  # One mount target per availability zone, each in the matching private subnet
  mount_targets = { for k, v in zipmap(local.availability_zones, module.vpc.private_subnets) : k => { subnet_id = v } }

  security_group_description = "${var.environment} EFS security group"
  security_group_vpc_id      = module.vpc.vpc_id
  security_group_rules = {
    vpc = {
      description = "Ingress from VPC private subnets"
      cidr_blocks = module.vpc.private_subnets_cidr_blocks
    }
  }

  tags = var.tags
}
```
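The `mount_targets` expression in the EFS module pairs each availability zone with the private subnet at the same position, giving EFS one mount target per AZ. As an illustration only, the Python below mirrors what `zipmap` plus the `for` expression evaluate to. The AZ names and subnet IDs are made-up placeholders, and it assumes `local.availability_zones` and `module.vpc.private_subnets` are listed in the same order. Like Terraform's `zipmap`, it rejects lists of different lengths.

```python
def efs_mount_targets(availability_zones: list[str], private_subnets: list[str]) -> dict[str, dict[str, str]]:
    """Mirror `{ for k, v in zipmap(azs, subnets) : k => { subnet_id = v } }`."""
    if len(availability_zones) != len(private_subnets):
        # Terraform's zipmap requires both lists to be the same length.
        raise ValueError("availability_zones and private_subnets must have the same length")
    return {az: {"subnet_id": subnet} for az, subnet in zip(availability_zones, private_subnets)}


if __name__ == "__main__":
    # Placeholder values for illustration only.
    azs = ["us-east-1a", "us-east-1b", "us-east-1c"]
    subnets = ["subnet-aaa111", "subnet-bbb222", "subnet-ccc333"]
    for az, target in efs_mount_targets(azs, subnets).items():
        print(az, target)
```

The positional pairing is why the two lists must be ordered consistently: if the subnet list were sorted differently from the AZ list, a mount target could be keyed to an AZ that does not match its subnet.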

Step 2: Running COBOL Data Processing as a Kubernetes CronJob

Once the infrastructure is in place, we define a Kubernetes CronJob that runs the COBOL transformation at the top of every hour and writes its output to the shared EFS volume mounted at `/data`.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cobol-transform
spec:
  schedule: "0 * * * *" # run at minute 0 of every hour
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: cobol-transform
              image: myrepo/cobol-transform:latest
              volumeMounts:
                - name: efs-storage
                  mountPath: /data
          restartPolicy: OnFailure
          volumes:
            - name: efs-storage
              persistentVolumeClaim:
                claimName: efs-claim # EFS-backed PVC (not shown)
```
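The COBOL program itself is not shown. For exercising the enrichment stage locally without it, a stand-in that emits lines in the `Order: ... | Frequency: ...` layout described by the prompt in Step 3 can be handy. This sketch is hypothetical: the CSV column names (`order_number`, `customer_name`, `address`, `product`, `quantity`, `frequency`) are assumptions, not the real COBOL record layout.

```python
import csv
import io

# Hypothetical CSV column names; the real COBOL record layout is not shown in the pipeline.
FIELDS = ["order_number", "customer_name", "address", "product", "quantity"]


def to_prompt_lines(csv_text: str) -> str:
    """Convert CSV rows into 'Order: ... | Frequency: ...' lines matching the prompt's input format."""
    lines = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        parts = [row[name].strip() for name in FIELDS]
        lines.append("Order: " + " | ".join(parts) + f" | Frequency: {row['frequency'].strip()}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up sample row for illustration.
    sample = (
        "order_number,customer_name,address,product,quantity,frequency\n"
        "1234,John Doe,1 Main St,Widget A,3,1\n"
    )
    print(to_prompt_lines(sample))
```

Writing the result to the path the enrichment script reads (`TRANSFORMED_FILE`) lets the LLM stage be tested independently of the COBOL container.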

Step 3: AI Enrichment with a Large Language Model

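The AI enrichment step is a Python script that reads the COBOL-transformed order file from EFS, sends it together with a categorization prompt to an LLM (`deepseek-r1:7b`) served from a Kubernetes pod behind `deepseek-service`, and writes the enriched response back to storage.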

```python
import os

import requests

PROMPT = """You are an AI assistant that analyzes customer order data and provides insights.

Task: Process the input orders and enrich them with:
1. Customer Category
2. Personalized Recommendation

Categorization Rules:
- VIP Customer:
  * Total spend over 50 units
  * Order frequency of 5 or more
  * Offer a 10% discount
- Standard Customer:
  * Total spend between 10-50 units
  * Order frequency between 2-4
  * No special offer
- New Customer:
  * Total spend less than 10 units
  * Order frequency of 1
  * Suggest an upsell to a different product

Input Format:
Order: [Order Number] | [Customer Name] | [Address] | [Product] | [Quantity] | Frequency: [Order Frequency]

Output Format:
Order: [Original Order Details] | Category: [Customer Category] | [Optional Recommendation]

Please process the following orders:
"""


def read_input_file(filepath):
    """Read the COBOL-transformed order data from shared storage."""
    with open(filepath, "r") as f:
        return f.read().strip()


def process_with_llm(input_text):
    """Send the prompt plus order data to the in-cluster LLM service and return its text output."""
    # Cluster DNS name of service "deepseek-service" in namespace "deepseek"
    url = "http://deepseek-service.deepseek.svc.cluster.local:11434/api/generate"
    payload = {
        "model": "deepseek-r1:7b",
        "prompt": PROMPT + input_text,
        "stream": False,  # ask for one complete JSON response instead of streamed chunks
    }
    headers = {"Content-Type": "application/json"}

    # Generation can take a while; the timeout stops a hung call from blocking the job forever.
    response = requests.post(url, json=payload, headers=headers, timeout=300)
    if response.status_code != 200:
        raise RuntimeError(f"LLM request failed with status {response.status_code}: {response.text}")
    return response.json().get("response", "")


def main():
    # Paths can be overridden via environment variables; defaults point at the EFS mount.
    input_file = os.environ.get("TRANSFORMED_FILE", "/mnt/efs/output/transformed_orders.txt")
    output_file = os.environ.get("LLM_TRANSFORMED_FILE", "/mnt/efs/output/llm_transformed_orders.txt")

    input_text = read_input_file(input_file)
    llm_response = process_with_llm(input_text)

    with open(output_file, "w") as f:
        f.write(llm_response)
    print(f"Processed orders saved to {output_file}")


if __name__ == "__main__":
    main()
```
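Because the categorization rules are spelled out completely in the prompt, a deterministic implementation of them can act as a cross-check on the model's answers. The sketch below reflects one assumed reading of the rules: both the spend and the frequency condition must hold for a category, and anything that matches no category, or lacks a frequency value, comes back as `Unclassified`. Under that reading, an order with a spend of 100 and no frequency, like Order 5678 in the sample output below, is `Unclassified`, whereas the model assumed a frequency of five. The function name `categorize` is hypothetical.

```python
from typing import Optional


def categorize(total_spend: float, frequency: Optional[int]) -> tuple[str, Optional[str]]:
    """Apply the prompt's rules deterministically; returns (category, recommendation)."""
    if frequency is None:
        # The prompt gives no rule for missing data, so refuse to guess.
        return "Unclassified", None
    if total_spend > 50 and frequency >= 5:
        return "VIP Customer", "Offer a 10% discount"
    if 10 <= total_spend <= 50 and 2 <= frequency <= 4:
        return "Standard Customer", None
    if total_spend < 10 and frequency == 1:
        return "New Customer", "Suggest an upsell to a different product"
    # Combinations the rules do not cover, e.g. a spend of 100 with a frequency of 1.
    return "Unclassified", None


if __name__ == "__main__":
    print(categorize(3, 1))       # New Customer with an upsell suggestion
    print(categorize(100, None))  # Unclassified: frequency missing
```

Comparing this function's category with the one the LLM returns for each order is a cheap way to flag responses that need review.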

Before & After: The Power of AI Augmentation

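The sample below shows the raw response from `deepseek-r1:7b` for three orders. DeepSeek-R1 first writes out its reasoning, which ends with a `</think>` tag, and then gives its final categorized answer: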

```text
First, looking at Order 1234:
- John Doe spent $3 on Widget A.
- His order frequency is once.

Since his total spend is below $50 and he ordered only once, he falls under the New Customer category. I should suggest an upsell to a different product for him.

Next, Order 5678:
- Jane Smith spent $100 on Widget B with high frequency.

Her spending exceeds $50, and she placed orders five times (since the frequency is missing but implied as multiple). This meets the criteria for a VIP customer. She deserves a special offer of 10% discount.

Then, Order 9101:
- Mike Johnson spent $5 on Widget A once.

His total spend is low ($5) and he ordered just once, which classifies him as a New Customer again. Similar to John Doe, I'll suggest an upsell recommendation for another product.

I should ensure each order is processed correctly according to the rules without mixing up any details. Also, since the frequency in Order 9101 was mentioned as "Frequency:", maybe it's just incomplete and assumed once. That’s important because it affects the categorization.
</think>
```

Here are the categorized orders with personalized recommendations:

1. **Order: 1234**
   - **Customer Name:** John Doe
   - **Category:** New Customer
   - **Recommendation:** Suggest an upsell to a different product.
2. **Order: 5678**
   - **Customer Name:** Jane Smith
   - **Category:** VIP Customer
   - **Recommendation:** Offer a 10% discount on the next purchase.
3. **Order: 9101**
   - **Customer Name:** Mike Johnson
   - **Category:** New Customer
   - **Recommendation:** Suggest an upsell to a different product.
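DeepSeek-R1 models write their reasoning between `<think>` and `</think>` tags before the final answer, which is why the raw output above contains a reasoning trace ending in `</think>`. If only the final answer should be stored in `llm_transformed_orders.txt`, the response can be trimmed before it is written. The sketch below keeps everything after the last closing tag; `strip_reasoning` is a hypothetical helper, and relying on the literal tag is an assumption about the model's output format.

```python
def strip_reasoning(response_text: str) -> str:
    """Return only the final answer from a reasoning-model response.

    Assumes the reasoning trace ends with a literal '</think>' tag. If no tag is
    present, the whole response is returned, trimmed of surrounding whitespace.
    """
    marker = "</think>"
    # rpartition returns ('', '', text) when the marker is absent, so index 2 is always the tail.
    return response_text.rpartition(marker)[2].strip()


if __name__ == "__main__":
    raw = "<think>\nFirst, looking at Order 1234...\n</think>\n\nHere are the categorized orders"
    print(strip_reasoning(raw))
```

In the Step 3 script this would slot in as `llm_response = strip_reasoning(process_with_llm(input_text))`.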

Business Impact of AI-Powered Data Enrichment

Implementing AI-driven data enrichment offers enterprises:

- Enhanced Data Usability: AI transforms structured data into actionable insights.

- Cost Efficiency: Reduces manual data processing overhead.

- Operational Agility: Enables real-time decision-making.

By integrating AI-powered enrichment within a Kubernetes-based pipeline, enterprises can modernize legacy workloads without full rewrites, bridging the gap between traditional COBOL applications and modern AI-driven analytics.

Final Thoughts

Legacy systems don’t have to remain static. By adopting Kubernetes, LLMs, and cloud-native automation, enterprises can enhance the value of their legacy data, reduce costs, and improve business intelligence.