Model evaluation and tuning are important steps in building an effective churn prediction model. SageMaker Canvas provides several metrics and tools, such as the Confusion Matrix, F1 Score, Precision, Recall, and AUC-ROC, to assess model performance. This guide explores these metrics, explains the importance of the confusion matrix, and provides practical tuning techniques to optimize model accuracy and reliability in churn prediction.

Understanding Evaluation Metrics

F1 Score

The F1 Score is a harmonic mean of Precision and Recall. It's particularly useful for churn prediction, where false positives (predicting churn when the customer stays) and false negatives (predicting no churn when the customer leaves) have different business costs.

- Formula:

F1 = 2 * (Precision * Recall) / (Precision + Recall) - Use Case: An F1 Score is ideal when you need a balance between Precision and Recall, as it penalizes extreme values in either metric.

Precision

Precision measures the accuracy of positive predictions (i.e., identifying churned customers).

- Formula:

Precision = True Positives / (True Positives + False Positives) - Interpretation: A high Precision indicates that when the model predicts churn, it's usually correct. This is critical if the cost of mislabeling non-churn customers as churners is high.

Recall

Recall measures the ability of the model to capture all actual churn cases.

- Formula:

Recall = True Positives / (True Positives + False Negatives) - Interpretation: High Recall means the model captures most churners, even if it occasionally mislabels non-churn customers. This is crucial when missing a true churn case has significant repercussions.

AUC-ROC

AUC-ROC (Area Under the Receiver Operating Characteristic Curve) measures the model's ability to distinguish between churn and non-churn cases across different thresholds.

- Range: AUC values range from 0 to 1, with values closer to 1 indicating better distinction between classes.

- Interpretation: A high AUC-ROC score implies that the model effectively separates churners from non-churners, regardless of specific threshold settings.

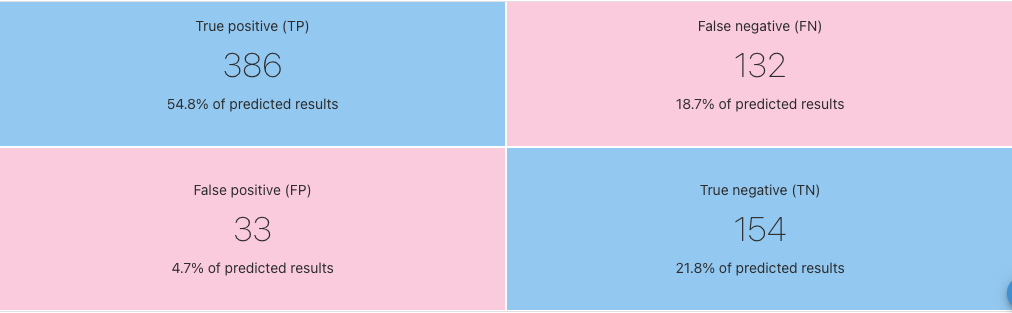

Confusion Matrix Analysis

The Confusion Matrix is a valuable tool for visualizing model performance, showing the counts of True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN). Here's an example of how to interpret these values in the context of churn prediction:

- True Positives (TP): Customers predicted to churn who actually churned.

- True Negatives (TN): Customers predicted to stay who actually stayed.

- False Positives (FP): Customers predicted to churn who actually stayed (type I error).

- False Negatives (FN): Customers predicted to stay who actually churned (type II error).

Business Impact

- High FP (False Positives): If high, this could lead to unnecessary retention efforts on customers unlikely to churn.

- High FN (False Negatives): If high, the model fails to capture potential churners, leading to lost revenue.

Analyzing the confusion matrix allows you to adjust the model based on the cost associated with each type of error.

Optimizing Recall and Precision

Balancing Recall and Precision is crucial in churn prediction. Here are techniques to adjust each metric:

Techniques to Improve Recall

- Lower the Decision Threshold: Reducing the threshold for predicting churn increases Recall, catching more potential churners.

- Oversampling: Use techniques like SMOTE (Synthetic Minority Over-sampling Technique) to balance the dataset if churn cases are a minority class.

- Synthetic Data: Leverage SageMaker Canvas's synthetic data generation to expand the dataset and enhance model robustness.

Techniques to Improve Precision

- Increase the Decision Threshold: Raising the threshold for predicting churn reduces False Positives, enhancing Precision.

- Feature Engineering: Remove irrelevant features and improve feature selection to make the model more confident in its predictions.

- Cross-Validation: Use k-fold cross-validation to ensure the model is generalizing well to new data.

These techniques can be adjusted iteratively in SageMaker Canvas to find the optimal balance for your specific business context.

Synthetic Data Generation

SageMaker Canvas offers synthetic data generation, creating additional test records that simulate real-world data patterns. This can help improve model robustness, especially when dealing with limited data.

Benefits of Synthetic Data

- Data Augmentation: Adds variability to the dataset, improving generalization.

- Enhances Model Evaluation: Synthetic data helps test the model on unseen patterns, making it more resilient to edge cases.

- Balances Dataset: If churn cases are rare, synthetic data can artificially increase churn cases, improving model training.

By leveraging synthetic data, SageMaker Canvas enables a more stable model that performs well in production settings.

Practical Tips for Tuning Models in SageMaker Canvas

Use Hyperparameter Tuning

SageMaker Canvas allows for hyperparameter tuning, which can optimize the model by adjusting parameters such as learning rate, depth, and dropout rates.

- Experiment with Different Parameters: Try various values for learning rate, regularization, and depth for tree-based models like XGBoost.

- Use Early Stopping: Early stopping halts training if the model stops improving, preventing overfitting.

- Evaluate Feature Importance: Analyze feature importance in SageMaker Canvas to understand which factors influence churn prediction the most.

Set up a CI/CD Pipeline for Continuous Tuning

- Automation: Use AWS CodePipeline to automate retraining whenever new data is added.

- Monitoring: Set up CloudWatch alerts to monitor model performance over time, ensuring consistent results.

Regularly Re-evaluate the Confusion Matrix

Periodically analyze the confusion matrix as part of your model evaluation to understand where the model is misclassifying. This will help you fine-tune the model for better performance.

Effective evaluation and tuning in SageMaker Canvas ensure your churn prediction model is accurate, robust, and aligned with business needs. By understanding key metrics, leveraging synthetic data, and applying practical tuning techniques, you can optimize the model's performance for high-stakes applications in customer retention. Regularly re-evaluate and adjust based on confusion matrix insights to maintain a model that reliably identifies churners, helping drive strategic decisions in customer retention.