Overview

Data preparation is a foundational step in any machine learning project, impacting both model performance and interpretability. Properly formatted and well-structured data is essential for building robust models and producing reliable predictions. In this project, we leverage Google BigQuery for both data storage and model training. This approach minimizes data movement, leverages GCP’s scalability, and keeps our entire machine learning pipeline centralized.

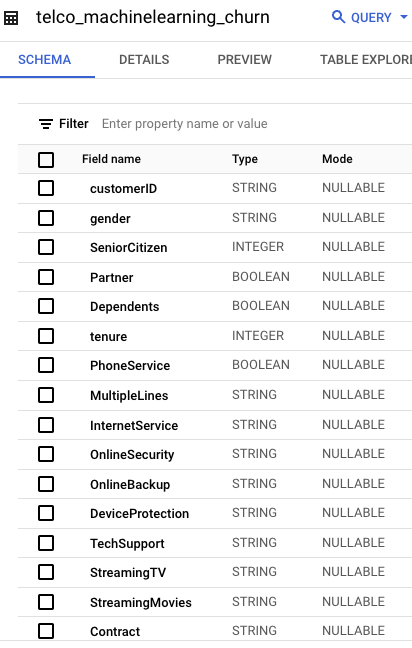

Data Schema

To build an effective churn prediction model, the data schema must capture relevant customer attributes that correlate with churn likelihood. We define each column’s type and mode based on customer characteristics commonly linked to churn in telecommunications, such as tenure, contract type, and monthly charges. Ensuring accurate data types, like STRING for categorical fields and FLOAT for numeric fields, helps BigQuery ML to interpret and process the data correctly.

Schema Fields and Types

Each column in our schema has been chosen to provide insights into customer behaviors:

- customerID (STRING): A unique identifier for each customer.

- gender (STRING): Demographic data, useful for segmentation.

- SeniorCitizen (INTEGER): Encodes customer’s seniority, affecting churn patterns.

- Contract (STRING): Identifies contract type, impacting churn likelihood.

- MonthlyCharges (FLOAT): Financial metric, often a direct churn indicator.

- Churn (BOOLEAN): The target variable, representing whether the customer has churned.

This schema creates a structured, organized dataset, making it easy to build and evaluate models.

Data Ingestion with BigQuery Jobs

Once the data schema is defined, we upload the raw customer data from Google Cloud Storage (GCS) to BigQuery using a BigQuery load job. This approach efficiently imports CSV data, ensuring consistency and data integrity.

Terraform Configuration for Data Loading

The following Terraform configuration sets up a BigQuery load job that ingests data from a CSV file in GCS into the BigQuery table. Key options include source_format for the CSV format, write_disposition to overwrite existing data, and null_marker to handle null values.

resource "google_bigquery_job" "load_customer_data" {

job_id = "${var.project}_${random_string.this.result}"

location = var.region

load {

destination_table {

project_id = google_bigquery_table.this.project

dataset_id = google_bigquery_table.this.dataset_id

table_id = google_bigquery_table.this.table_id

}

source_uris = ["gs://${google_storage_bucket.data_bucket.name}/${google_storage_bucket_object.customer_data.name}"]

source_format = "CSV"

write_disposition = "WRITE_TRUNCATE"

skip_leading_rows = 1

autodetect = true

field_delimiter = ","

null_marker = "\\N"

}

labels = var.labels

depends_on = [google_storage_bucket_object.customer_data]

}

Explanation

- source_uris: Points to the GCS bucket where the CSV data is stored.

- source_format: Specifies that the input format is CSV.

- write_disposition: Ensures that any previous data in the table is overwritten.

- null_marker: Defines a marker for null values to avoid issues when processing incomplete data.

- skip_leading_rows: Skips the first row if it contains column headers.

By automating the data load process with Terraform, we create a reproducible workflow that can be triggered consistently.

Common Errors and Troubleshooting

Data ingestion can sometimes result in errors. Below are common issues and how to address them:

- Schema Mismatch: Ensure the data types in the CSV file match the BigQuery schema exactly.

- NULL Handling: If null values aren't recognized, adjust the

null_markersetting. - Invalid Field Delimiters: Ensure the

field_delimiterin the BigQuery job matches the CSV format (e.g., commas for CSV). - Permission Errors: Verify that the service account has access to both GCS and BigQuery.

If errors occur, check the BigQuery job logs in the console for detailed messages. Making sure your data meets schema expectations helps avoid these common pitfalls.

Code Snippets for Data Validation

After loading data, validating it in BigQuery ensures that it meets the schema requirements and accurately reflects the raw data. The following SQL query checks the schema and reviews the first few rows of data.

'''

SELECT *

FROM <DATASET_NAME>.<TABLE_NAME>

LIMIT 10;

'''

Running this query confirms that all columns are loaded correctly and allows you to visually inspect sample data, verifying that columns such as customerID, MonthlyCharges, and Churn are correctly populated.

In this article, we’ve covered the steps for loading and preparing customer data for use in BigQuery ML. By setting up a robust data ingestion pipeline, we lay the foundation for training an accurate churn prediction model.