In customer churn prediction, selecting the right algorithm is central to balancing accuracy, computational efficiency, and business impact. AWS SageMaker Canvas offers several algorithms, including XGBoost and Multilayer Perceptron (MLP), both of which are well suited to binary classification tasks like churn prediction. This article compares the two algorithms in depth, covering their training processes, their performance metrics, and the scenarios in which each is most suitable.

Algorithm Selection

XGBoost (Extreme Gradient Boosting)

XGBoost is a popular tree-based ensemble learning algorithm known for its speed and accuracy on structured (tabular) data. It handles binary classification by adding weak learners (shallow decision trees) one at a time, each fitted to the errors of the ensemble built so far, so that prediction error shrinks with every round.

- Advantages: Fast, reasonably interpretable through feature importance scores, and efficient on large datasets.

- Limitations: Poorly suited to unstructured data such as text or images, and may struggle with very complex, high-dimensional feature spaces.
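The idea of iteratively combining weak learners can be sketched in a few lines. What follows is plain gradient boosting with one-split trees ("stumps") on a single feature, not XGBoost itself, which adds second-order gradients, regularization, and many engineering optimizations. The feature name `support_tickets` and the data are made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_stump(xs, residuals):
    """Return (threshold, left_value, right_value) for the one-split tree
    that best fits the residuals in the least-squares sense.
    Assumes xs contains at least two distinct values."""
    best = None
    for t in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    return best[1:]

def boost(xs, ys, rounds=20, learning_rate=0.5):
    """Fit `rounds` stumps, each one to the errors left by the previous ones."""
    stumps, scores = [], [0.0] * len(xs)
    for _ in range(rounds):
        # Negative gradient of log loss w.r.t. the score: label minus predicted probability.
        residuals = [y - sigmoid(s) for y, s in zip(ys, scores)]
        t, lv, rv = fit_stump(xs, residuals)
        stumps.append((t, lv, rv))
        scores = [s + learning_rate * (lv if x <= t else rv) for x, s in zip(xs, scores)]
    return stumps

def churn_probability(stumps, x, learning_rate=0.5):
    """Sum the scaled stump outputs and squash the total into a probability."""
    score = sum(learning_rate * (lv if x <= t else rv) for t, lv, rv in stumps)
    return sigmoid(score)

# Hypothetical toy data: number of support tickets vs. churned (1) or stayed (0).
support_tickets = [0, 1, 1, 2, 3, 4, 5, 6]
churned = [0, 0, 0, 0, 1, 1, 1, 1]

stumps = boost(support_tickets, churned)
low_risk = churn_probability(stumps, 0)
high_risk = churn_probability(stumps, 6)
```

Each round nudges the scores toward the labels, so `low_risk` ends up below 0.5 and `high_risk` above it. The learning rate and the number of rounds play the same roles here as the tuned XGBoost parameters of the same names.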

Multilayer Perceptron (MLP)

MLP is a feed-forward neural network made up of multiple fully connected layers of neurons. Each neuron applies a non-linear activation to a weighted sum of its inputs, which lets the network model non-linear patterns and makes it versatile for a variety of tasks, including binary classification.

- Advantages: Powerful in capturing non-linear relationships and complex feature interactions.

- Limitations: Computationally expensive, and often needs more data and tuning to avoid overfitting.
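To make the non-linear processing concrete, here is a minimal forward pass through an MLP with one hidden layer. The weights are set by hand (not learned) so that the network flags churn risk only when exactly one of two hypothetical binary flags is set, an XOR-style interaction that no single linear boundary can represent. All names and values are illustrative assumptions.

```python
import math

def relu(values):
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum plus bias for each neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def mlp_forward(x, hidden_layers, output_layer):
    """Run x through ReLU hidden layers, then a single sigmoid output neuron."""
    h = x
    for weights, biases in hidden_layers:
        h = relu(dense(h, weights, biases))
    out_weights, out_biases = output_layer
    logit = dense(h, out_weights, out_biases)[0]
    return 1.0 / (1.0 + math.exp(-logit))

# Hand-picked weights for illustration only; a real MLP learns these from data.
hidden_layers = [([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0])]  # two hidden neurons
output_layer = ([[4.0, -8.0]], [-2.0])                     # one output neuron

probabilities = {
    flags: mlp_forward(list(flags), hidden_layers, output_layer)
    for flags in [(0, 0), (0, 1), (1, 0), (1, 1)]
}
```

With these weights the output is high for `(0, 1)` and `(1, 0)` and low for `(0, 0)` and `(1, 1)`. Removing the ReLU would collapse the network into a single linear function, and the interaction would be lost.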

Comparison Summary

XGBoost is generally preferred for tabular data due to its speed and interpretability, whereas MLPs may perform better with complex data but at a higher computational cost. SageMaker Canvas allows easy access to both, enabling business users to experiment with each model type.

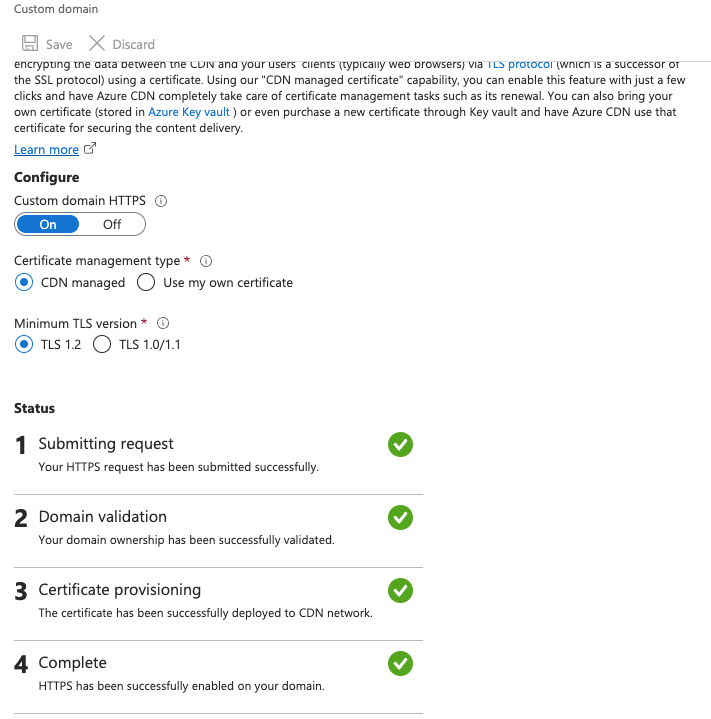

Training Process in SageMaker Canvas

SageMaker Canvas simplifies model training by automating many parts of the ML pipeline. Here’s how it handles training for XGBoost and MLP:

Data Preparation

- Data Splitting: By default, SageMaker Canvas uses an 80/20 split between training and validation data; the ratio can be changed in the advanced build settings.

- Feature Engineering: Automatically applies transformations like normalization and one-hot encoding where necessary.
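For readers who want to see what these steps amount to, the sketch below does a holdout split, min-max normalization, and one-hot encoding by hand. It is not Canvas's internal implementation. The column names, the rows, and the holdout fraction passed in are illustrative assumptions.

```python
import random

def train_test_split(rows, test_fraction, seed=0):
    """Shuffle a copy of the rows and hold out a fraction for testing."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def fit_min_max(values):
    """Return a function that rescales values to [0, 1] based on the fitted range."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero for a constant column
    return lambda v: (v - lo) / span

def fit_one_hot(values):
    """Return a function mapping a category to a 0/1 vector (all zeros if unseen)."""
    categories = sorted(set(values))
    return lambda v: [1 if v == c else 0 for c in categories]

# Hypothetical customer rows.
rows = [{"monthly_charge": 20.0 + 5 * i, "plan": "monthly" if i % 2 else "annual"}
        for i in range(10)]

train, test = train_test_split(rows, test_fraction=0.2)

# Fit the transformations on the training rows only, then reuse them on test rows,
# so that no information from the held-out data leaks into training.
scale = fit_min_max([r["monthly_charge"] for r in train])
encode = fit_one_hot([r["plan"] for r in train])

train_features = [[scale(r["monthly_charge"])] + encode(r["plan"]) for r in train]
test_features = [[scale(r["monthly_charge"])] + encode(r["plan"]) for r in test]
```

Fitting the scaler and encoder on the training portion alone is the design choice worth noting: it keeps the test metrics an honest estimate of performance on unseen customers.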

Hyperparameter Tuning

Both models undergo automatic hyperparameter optimization in SageMaker Canvas:

- XGBoost: Parameters such as learning rate, max depth, and number of estimators are tuned to prevent overfitting while maximizing accuracy.

- MLP: Tuning covers the number of layers, the neurons per layer, and the dropout rate, all of which affect the model's ability to generalize.
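Conceptually, hyperparameter optimization is a search over candidate settings, scored on validation data. The sketch below uses an exhaustive grid search as the simplest possible stand-in; it does not claim to be the strategy Canvas uses. The search space and the `toy_score` function are hypothetical. In practice `evaluate` would train a model with the given parameters and return a validation metric such as F1.

```python
import itertools

def grid_search(space, evaluate):
    """Try every combination in `space`; return the best parameters and score."""
    names = list(space)
    best_params, best_score = None, float("-inf")
    for combo in itertools.product(*(space[name] for name in names)):
        params = dict(zip(names, combo))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical search space using the XGBoost parameter names from the text.
space = {
    "learning_rate": [0.01, 0.1, 0.3],
    "max_depth": [2, 4, 8],
}

def toy_score(params):
    """Stand-in for 'train and validate'; peaks at learning_rate=0.1, max_depth=4."""
    return -(abs(params["learning_rate"] - 0.1) + abs(params["max_depth"] - 4) / 10)

best_params, best_score = grid_search(space, toy_score)
```

The same loop works for an MLP by swapping in a space over layer count, neurons per layer, and dropout rate. Grid search grows exponentially with the number of parameters, which is one reason automated services tend to rely on smarter search strategies.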

Training Execution

SageMaker Canvas trains both models iteratively, allowing users to track progress and view metrics as the models improve with each pass. The system provides a straightforward interface for monitoring, regardless of the model complexity.

Performance Analysis

Metrics Overview

SageMaker Canvas evaluates models on the following metrics, each of which captures a different aspect of how well an algorithm predicts churn.

- F1 Score: The harmonic mean of Precision and Recall. It weights the two equally, which makes it particularly useful when classes are imbalanced, as churn data usually is.

- Precision: The share of predicted churn cases that actually churned.

- Recall: The share of actual churn cases that the model identifies.

- AUC-ROC: The Area Under the Receiver Operating Characteristic Curve indicates the model's ability to distinguish between churn and non-churn.
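The four metrics above can be computed directly from labels and model scores. The sketch below is a from-scratch illustration, with AUC-ROC computed as the probability that a randomly chosen churner receives a higher score than a randomly chosen non-churner (ties count as half). The labels, scores, and the 0.5 threshold are made up for illustration.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute Precision, Recall, and F1 for the positive (churn) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

def roc_auc(y_true, scores):
    """Fraction of (churner, non-churner) pairs ranked correctly by score."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))

# Hypothetical labels (1 = churned) and model scores.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.7, 0.4, 0.2, 0.1, 0.05]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
auc = roc_auc(y_true, scores)
```

Here two of the three predicted churners are real and two of the three real churners are caught, so Precision, Recall, and F1 all equal 2/3, while 13 of the 15 churner/non-churner pairs are ranked correctly, giving an AUC of about 0.87. Precision, Recall, and F1 depend on the chosen threshold; AUC-ROC does not.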

Metric Differences Between XGBoost and MLP

- XGBoost: Typically scores well on F1 Score, Precision, and AUC-ROC on tabular data, where tree-based ensembles are a strong default.

- MLP: Can achieve higher Recall by capturing complex patterns, but may need more tuning to keep false positives in check and costs more to train.

Results Comparison

The following summarizes, in qualitative terms, how each model performed on the churn prediction dataset, highlighting their strengths and limitations.

XGBoost Results

- F1 Score: High, indicating balanced Precision and Recall.

- Precision: High, making it suitable when false positives are costly.

- Recall: Adequate, though sometimes lower than MLP for complex patterns.

- AUC-ROC: Consistently high, reflecting strong binary classification performance.

MLP Results

- F1 Score: Competitive with XGBoost, though more sensitive to overfitting.

- Precision: Slightly lower than XGBoost, especially if not well-tuned.

- Recall: Higher than XGBoost, beneficial when false negatives (missed churn cases) are critical.

- AUC-ROC: Generally high, though affected by hyperparameter tuning and training data volume.

When to Choose Each Algorithm

- XGBoost: Use when model interpretability, speed, and performance on structured/tabular data are priorities.

- MLP: Use when the dataset has complex feature interactions, high Recall is essential, and computational resources are available.
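One way to turn the Precision versus Recall trade-off into a decision is to attach a business cost to each type of error. The sketch below is only an illustration of that reasoning: the error counts and costs are invented, and the two candidates are labeled by behavior rather than by algorithm, since which model lands where depends on the dataset.

```python
def error_cost(false_positives, false_negatives, cost_fp, cost_fn):
    """Total cost of a model's mistakes on a validation set."""
    return false_positives * cost_fp + false_negatives * cost_fn

def cheaper_model(candidates, cost_fp, cost_fn):
    """Return the name of the candidate whose errors cost the least."""
    return min(
        candidates,
        key=lambda name: error_cost(*candidates[name], cost_fp, cost_fn),
    )

# Hypothetical (false positives, false negatives) on the same validation set.
candidates = {
    "high_precision_model": (5, 30),   # few wasted offers, more missed churners
    "high_recall_model": (20, 10),     # more wasted offers, fewer missed churners
}

# Scenario A: losing a customer costs far more than an unnecessary retention offer.
choice_when_churn_is_costly = cheaper_model(candidates, cost_fp=10, cost_fn=100)

# Scenario B: retention offers are expensive relative to a lost customer.
choice_when_offers_are_costly = cheaper_model(candidates, cost_fp=100, cost_fn=20)
```

In scenario A the high-recall model wins (cost 1,200 versus 3,050); in scenario B the high-precision model wins (cost 1,100 versus 2,200). The same metrics can therefore point to different algorithms depending on the economics of the retention program.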

Considerations

Cost-Performance Trade-Offs

SageMaker Canvas abstracts much of the model complexity, but the cost implications of choosing MLP over XGBoost can still be significant.

- XGBoost: Computationally efficient, leading to lower costs, especially in production environments.

- MLP: Computationally expensive, especially with many layers and neurons, so costs need careful management when the model is used at scale.

Model Complexity and Interpretability

XGBoost is the more interpretable of the two: although an ensemble of many trees cannot be read directly, its tree structure yields feature importance scores that show which inputs drive predictions. MLPs, on the other hand, act as "black-box" models, making them harder to interpret but potentially more powerful on complex data.
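When a model offers no built-in view of what drives its predictions, a model-agnostic technique such as permutation importance can help: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a minimal version that treats the model as an opaque `predict` function; the toy model and data are hypothetical, and this is not a description of how Canvas computes its own explanations.

```python
import random

def accuracy(predict, rows, labels):
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(predict, rows, labels, n_features, repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild the rows with only column j replaced by its shuffled values.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances

# Hypothetical black-box model: it only looks at feature 0 and ignores feature 1.
def predict(row):
    return int(row[0] > 3)

rows = [[0, 9], [1, 2], [2, 7], [5, 1], [6, 8], [7, 3]]
labels = [0, 0, 0, 1, 1, 1]

importances = permutation_importance(predict, rows, labels, n_features=2)
```

Shuffling feature 0 breaks the predictions, so its importance is positive, while shuffling the ignored feature 1 changes nothing and its importance is exactly zero. Because the technique needs only predictions, it applies equally to an MLP and to a tree ensemble.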

Selecting the right algorithm for churn prediction depends on the dataset characteristics and the business requirements around accuracy, speed, and interpretability. SageMaker Canvas provides an accessible way to explore these algorithms, enabling business users to make informed decisions based on performance metrics and computational considerations.